- Aclara Technologies Blog

- How Machine Learning Is Revolutionizing Utility Operations

How Machine Learning Is Revolutionizing Utility Operations

Written by Dr. David Rieken on February 25, 2020

Machine learning has changed our daily lives for the better. And it is doing the same for utilities that use data to improve operations.

At one point during the recent DistribuTECH 2020 conference, as I was attending talk after talk about how utilities are trying to move the energy industry into the future, it occurred to me that in many ways the future is already here. Had I been attending this conference 20 years ago I would have had to print out paper airline tickets, MapQuest directions from the airport to the hotel, the entire DistribuTECH agenda, attendee list, hotel confirmation numbers, and any technical notes I might need, and filled my backpack with paper before I left. I probably could have sent an email or two, if I had taken what would now seem like a very large laptop. But that wouldn’t have happened until I got to the hotel, assuming the hotel had an Ethernet connection. Maybe I could have printed out something had I gone to the hotel business center. Communication with my staff back in St. Louis would have been arduous and thus infrequent and necessarily deliberate.

Follow the trends. Subscribe to our blog.

Instead, I managed to get through the entire show without a single piece of printed paper (unless you count the badge I was given at registration). The only items I took with me were my Android smart phone and my iPad. With just those two items I was able to stay in constant touch with my wife, my colleagues at the show, and the Aclara research staff back at the office. I was able to scan marketing literature with my phone and archive it in my online library, which, by the way, was available to me in its entirety throughout the entire trip. I was able to board the plane without paper. I was able to summon a car to take me from the airport to the hotel and back, and I was able to track the locations of both while I was waiting and in transit. Any receipts I needed for expense reporting were scanned and immediately thrown away.

If I had a dedicated personal assistant working for me 20 years ago, I still would not have been able to do all these things as easily as I did last week. In fact, I do have a dedicated personal assistant working for me now, in the form of my phone, my iPad, and the many apps designed to make life easier. The symbiotic relationship between me and my robot assistant has made me into an amalgam (some might say a sort of cyborg) that is far more productive than my counterpart of two decades ago. Most science fiction of the last century did not even predict that this would happen.

Based on what I saw at DistribuTECH, utilities, it would seem, are undergoing the same transformation.

Machine learning augments, rather than replaces, human thinking

Two centuries ago, the industrial revolution was beginning to take hold in England. Continental Europe was dealing with the aftermath of the French Revolution, specifically in the form of the military strongman, Napoleon Bonaparte, and Great Britain found itself politically and economically isolated. At the peak of its power, France forced the bulk of mainland Europe into what would today be called an embargo of the British Empire. Poverty and unemployment in England increased and many in the working class began to lose patience.

The term Luddite, named after the famous textile worker rebellions of the 19th century, is synonymous with movements that fight against technological progress.

Textile workers in particular, began to look at the new factories, which were replacing the older mills that had employed skilled workers, as the problem. Some of them took to violence as mobs of angry workers smashed textile machinery in protest. For reasons lost to history this became known as the Luddite rebellions.

Despite eventually being pacified by the British army, the Luddites never really left us. For every new technology there is a voice decrying that technology as a potential job-killer. Throughout the information age there have been warnings that our increasing dependence on technology would put us out of work. Some issue even more dire warnings and insist that we are imbuing our machines with such intelligence that someday, ostensibly soon, they will become more intelligent than us and perhaps decide that they no longer need (or want) us around.

However, in two centuries the predictions of doom have not materialized. Rather, as the sophistication of our machines has increased, new opportunities have been created. A few decades ago, to work was to produce something physical, something tangible that could be held in your hand and had some intrinsic value in its existence by itself. Now, much of what we produce exists only as a sequence of ones and zeros stored on a silicon chip.

A huge economy has arisen around flipping bits in a computer: creative types record music, film and edit video, or write literature on silicon-powered laptops or tablets. Engineers design and document systems on desktop computers long before they are ever built. Businessmen spend their time composing reports in excel and documenting them in PowerPoint. As I write this on Microsoft Word, I am, fundamentally, constructing a binary file on one of Microsoft’s cloud servers somewhere. While some jobs have undoubtedly been anachronized, new jobs have been created.

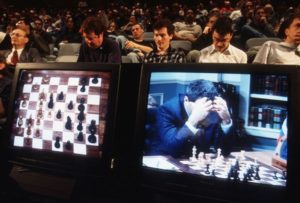

Grandmaster Garry Kasparov, arguably one of the greatest chess champions of all time, famously lost to a computer in 1997.

Garry Kasparov, a chess grandmaster and former world chess champion, may have cause to feel about artificial intelligence as the Luddites did about textile mills. In 1997 he infamously became the first reigning world chess champion to be defeated by a computer. Despite being one of the most famous people to lose their job to a machine learning program, though, Kasparov is surprisingly optimistic about the future we will share with artificial intelligence.

A few years after his loss, he took part in what he called the Advanced Chess Experiment. As part of the experiment a chess tournament was convened in which three types of teams could compete: teams consisting of only human players, teams consisting of only chess-playing software, and teams consisting of human players assisted by chess-playing software. In these tournaments the human/software teams consistently beat out the teams of only humans and only software. To Kasparov the message is clear: what humans do well is very different from what machines do well. Human thinking assisted by machine thinking creates a formidable hybrid that is better than the sum of its parts.

Utilities are just starting to learn what machine learning can do for them

Back to DistribuTECH. Judging from the presentations at the summit sessions and in the exhibition booths, many utilities are not only learning this lesson but taking it seriously to heart. Although it was not possible to attend all the talks, and my own sample set is likely biased, the theme of the show could easily have been on the role of analytics in modern utility operation. Most large utilities now appear to have at least some data analytics or data science group on the roster and those groups were anxious to show off what they have been up to. The results ranged from the mundane to the ambitious.

Utilities can use software algorithms to predict when to replace assets such as utility poles

Several utilities have begun using machine learning to predict which of their assets are on the verge of failure. And whereas modern Luddites would have maintenance crews regularly visit all the assets and manually inspect them, utility operators will tell you that this is time-consuming and therefore expensive. It’s also a waste of the crew’s time since most of the assets are not failing.

One presentation from Exelon showed how a data science team is predicting which poles need to be replaced. The analysts take into consideration the age of the pole, the type of soil that the pole is mounted in, and stresses that the pole has been subjected to over its lifetime such as its age. From this information, the utility claims it can steer crews to the poles that need attention with a surprisingly high success rate, freeing up those crews for more urgent tasks without sacrificing the network’s integrity.

Other utilities are having similar successes predicting the remaining useful life in electrical assets such as distribution transformers. There was also some work presented on how some utilities are preparing for incoming storms by analyzing weather data. By predicting which assets are likely to be affected, crews can more efficiently prepare to restore power, and, perhaps, some outages can be averted.

There were many more examples. Cameras are being used to monitor the blades on windmills for stress. Image processing algorithms detect when the blade needs to be replaced without the need for constant inspection by a human. Maps are being used to determine which houses receive the most sunlight at the correct angle and are thus good candidates for solar panels. Disaggregation models are being used to determine how much current a solar panel feeds back into the network and which appliances are consuming excessive current. There was even some mention of automatically correcting network topology errors and determining the impedance of distribution lines.

In some cases, machines are being trained to operate networks with minimal human oversight. On microgrids and in large buildings, some utilities are showing how control devices can be switched automatically to enable optimal control. HVAC control, in particular, seems to be the target of many of these research efforts. It may seem small, but this is how research gets off the ground. I found myself wondering how long it will be before we see a presentation at DistribuTECH about how capacitor banks and voltage regulators are being operated by machine intelligence.

Data is the commodity of the future

What is really driving this revolution in analytics and artificial intelligence is the overwhelming preponderance of data that has become available to utilities. A constant theme at the conference was that utilities are drowning in data. It is now widely recognized that the advanced metering infrastructure (AMI) data that utilities have collected for years is more valuable than it was originally thought. Timely consumption data provides vital information into the flow of power through a network.

Add to that the many SCADA sensors, line sensors, control devices, and even social media (one presenter I saw claimed he could get outage data from watching his twitter feed!) and the trickle of data becomes a deluge. But it gets worse: sensors once reserved for the transmission network are now appearing on the distribution network. Some utilities are experimenting with phasor measurement units, or PMUs, that are capable of streaming sampled voltage and current waveforms at about 100 kbps. With all this data available, only a machine can siphon through all the garbage to find the nuggets of useful information.

One of the most interesting presentations to me was from Southern California Edison, which has started experimenting with state estimation on its distribution network. State estimation, closely related to power flow calculation, is an attempt to determine the voltage and current throughout the entire network. It is an essential part of transmission network operation but has long been considered too expensive or computationally prohibitive to do on distribution networks. With distributed energy resources becoming increasingly pervasive, though, many seem to be realizing that it will soon be an essential part of distribution network management as well. The work is clearly in its infancy, but it is the obvious next step to exploiting the voluminous amount of electrical data that is available now. I expect we will see much more of this in the near future.

I left DistribuTECH 2020 with the feeling that this is a very good time for research engineers such as myself to be in the utility industry. Long considered a slow-moving and commoditized industry, it now seems to be in a sort of renaissance. Aclara research is watching this revival with great interest.

At Aclara, we have identified three areas of research critical to advancing the state of the art in electric distribution: sensors, analytics, and communications.

- Our sensors research group is actively exploring new sensors to collect data from the network, and at methods for squeezing even more data out of the sensors that we presently offer.

- The analytics research group is looking at how we can augment a utility’s analytics efforts by intelligently combining the data from the many sensors we offer to provide new insights into how the distribution network is functioning.

- The communications research group is constantly looking at our communications networks to make sure they can handle the large amount of data that will have to be passed around, because software is useless if the data is not available for processing by the algorithms the analytics team invents.

Looking at the world we live in now as I imagine I would have 20 years ago; I am amazed at how computing technology has made our lives better. I wonder what the world will look like 20 years from now. Rather than fear the future and what our technology may unleash, I am excited about it and excited to have my own small role in shaping it.

Browse Topics

- Aclara News (6)

- AclaraConnect (6)

- AclaraONE (12)

- AMI deployments (19)

- Case Studies (1)

- Climate Challenges (2)

- Data analytics (19)

- Distribution Automation (12)

- Electric utilities (43)

- Fault detection and location (3)

- featured (31)

- Gas utilities (6)

- Grid monitoring (20)

- Leak detection (7)

- Load control (2)

- Non revenue water (7)

- Power Line Communications (6)

- Power quality (2)

- Renewable energy (5)

- Smart metering (3)

- Water utilities (16)

- Water Utility Solutions (2)

Subscribe

Sign me up to receive the latest posts from the Aclara Technologies Blog to my email.